Humanising Autonomy, the London-based AI startup that’s working to ensure our safety around self-driving cars, raised $5.3 million in funding to further commercialise its technological platform.

“Our vision has always been to set the global standard for how autonomous systems interact with people,” said Maya Pindeus, CEO of Humanising Autonomy, as reported by Eureka magazine. “We now have the resources to rapidly scale our company and are excited to expand the reach of our technology across all levels of automation and increase our deployments around the world.”

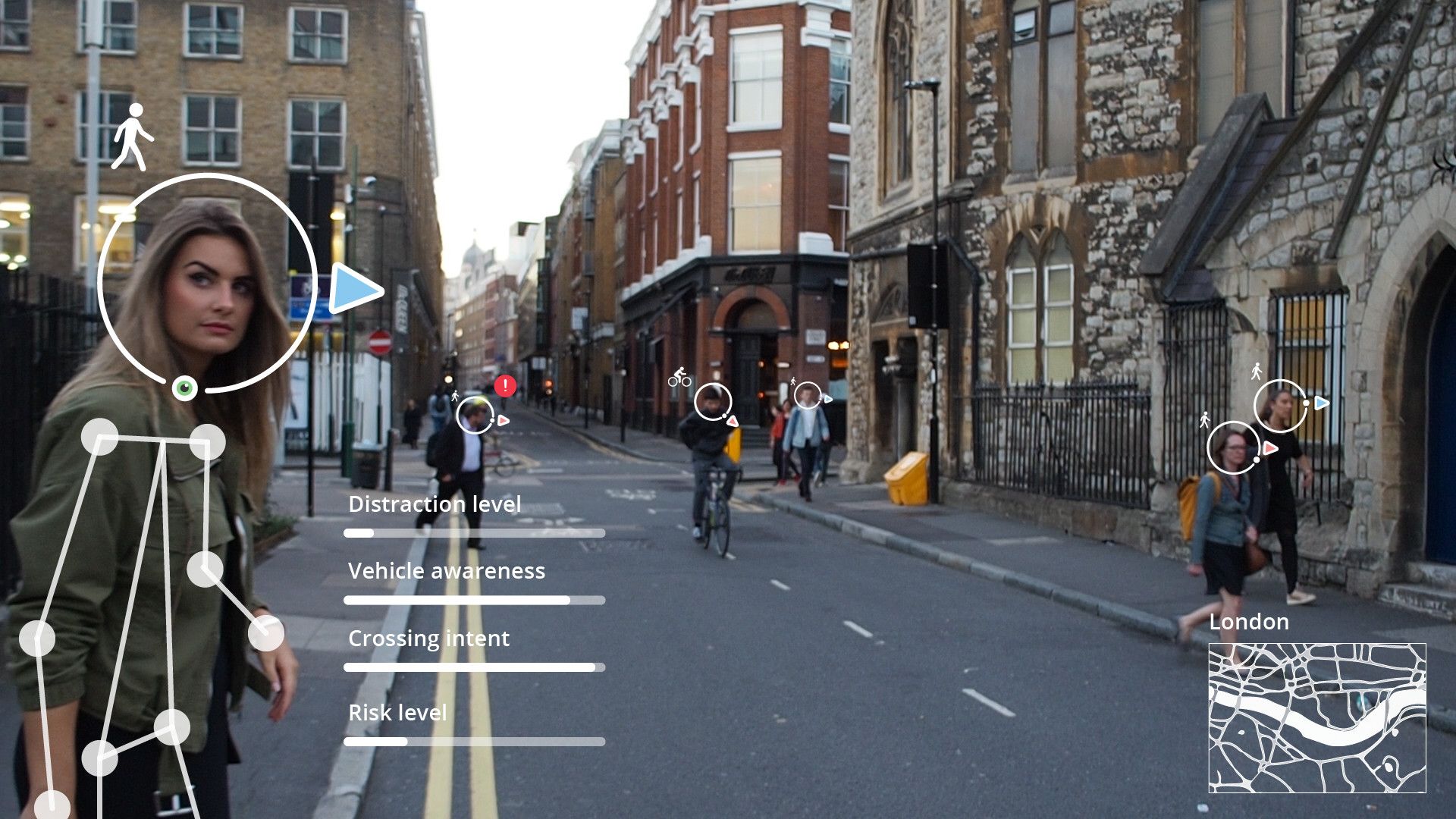

The company’s machine learning algorithms can recognise over 150 behaviours to determine whether a pedestrian is “risky” or “distracted.” Because the data is gathered from a variety of sources in cities around the world, including CCTV cameras, dash cams of varying resolutions, autonomous vehicle sensors and data partnerships with various institutions, the modules can adjust to different environments and climates.

“Cars need to understand the full breadth of human behaviour before they’re ever going to be implemented into urban environments,” Leslie Nooteboom, co-founder of Humanising Autonomy, told The Evening Standard in November 2018. “The current technology is able to understand whether something is a pedestrian and not a lamp post, and where that pedestrian is moving, framing them as a box. We’re looking inside that box to see what the person is doing, where they’re looking, are they aware of the car, are they on the phone or running.”

As a result, the startup’s technology notices danger over two seconds faster than a human can. It also accounts for differences in road, pedestrian and cycling behaviours throughout the world.

“It pays attention to the subtle, nuanced motion and body language of pedestrians and cyclists,” Pindeus told The Evening Standard in June 2019. “Tests found that in central London, where there are many more pedestrians and cyclists on the street, they are less likely to listen to the rules.”

Pindeus said the modules are also designed to adapt to different processing requirements.

“Our software will run on a standard GPU [graphics processing unit] when we integrate with level 4 or 5 vehicles, but then we work with aftermarket, retrofitting applications that don’t have as much power available, but the models still work with that,” she explained to TechCrunch. “So, we can also work across levels of automation.”

Because fully autonomous cars will not reach the market en masse for quite some time, this flexibility allows the technology to be used in current vehicles, for instance to warn drivers of a hazard behind the car or to activate automatic braking earlier.